From code generation to prototyping, AI is shaking up the world of app development.

These days, coders and non-coders have access to an expanding ecosystem of AI-powered tools: IDEs with built-in AI capabilities, AI-powered code editors, visual development tools with embedded AI features, and even AI agents orchestrating work across different platforms.

It is undeniable that AI is transforming many aspects of app development, and the models are getting smarter every day. However, the reality is more nuanced: AI's usefulness is still somewhat limited, especially for non-coders.

In this blog post, we explore the potential and pitfalls of AI in app development, focusing on the challenges that non-coders face and potential solutions for overcoming current limitations.

Let’s dive in.

AI can significantly accelerate the initial phases of app development by generating prototypes and foundational scaffolds from high-level requirements, including frameworks, wireframes and templates.

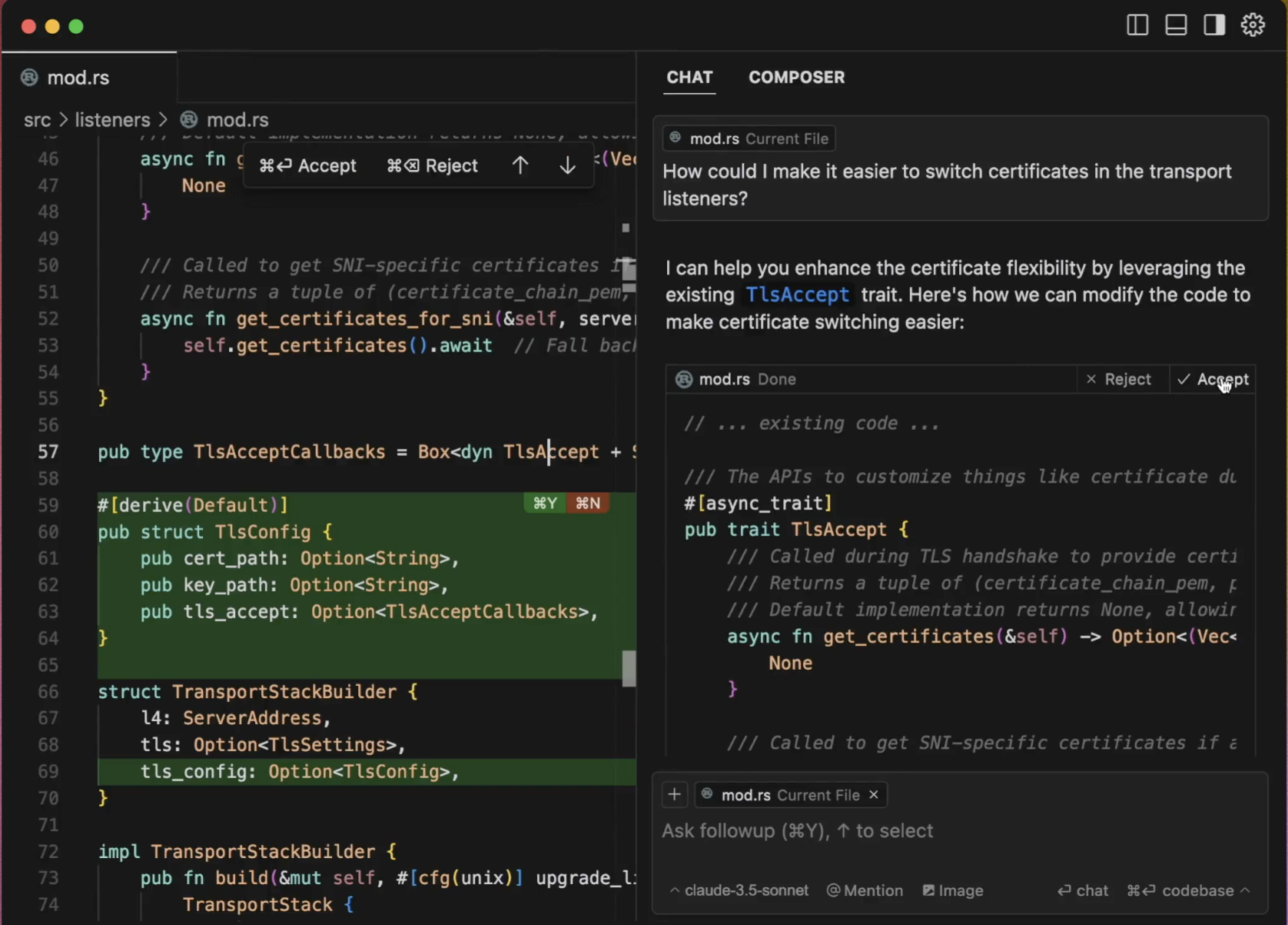

AI-powered coding assistants can boost efficiency on routine coding tasks and provide helpful tips and hints during the development process. Some tools even show promise in converting designs from tools like Figma and Framer into usable HTML and CSS components.

However, despite these impressive capabilities, AI still falls short across many other crucial stages of the app development lifecycle.

"AI app builders work nicely for crafting quick proof-of-concepts. But they're definitely not yet a replacement for humans who devote time and effort to creating polished, high-quality, 100% working apps." - Jon Adair, CTO at Curated Capital

AI struggles with customization and control. Yes, you can prompt your way to generated code, but you will often end up with a large, messy and unoptimized codebase that requires manual debugging and re-prompting for consistency.

For non-coders, making modifications through prompts alone can be frustrating. Small changes often require multiple attempts and can inadvertently affect other parts of the code or even compromise the entire structure.

In other words, initial prototyping usually comes easy; subsequent edits, not so much.

"I do like the speed AI gives me, but I prefer to have control over the app I'm building. With AI, I can generate the code and maybe even edit it slightly. But if I want to move a box or apply animation, that would require heavy prompting and lots of back and forth. I often feel that I don't have the control to make manual adjustments where necessary." - Anthony Smith, Founder at Corporate Synergy Solutions

Solution: Blending AI-assisted generation with manual adjustments for optimal flexibility and control. For example, WeWeb users can make design or logic adjustments in two ways. They can prompt WeWeb AI to make iterations until they're happy with the results or use the visual programming interface to make manual adjustments.

Where AI fails to deliver, humans need to have the option to step in and make the adjustments directly.

AI models operate as a black box. A lack of transparency and control over outputs leads to frustration, misaligned expectations and hallucinations: incorrect or implausible outputs that can break code and make debugging difficult.

And it goes beyond app development: hallucinations are making headlines across industries.

“In my experience, hallucinations can break so many things. Style, components and even the entire working software. It's extremely unpredictable, and guarding against those kinds of issues is a hard problem to solve.” - Rokas Jurkenas, Founder at Idea Link

Solution: App development tools need to implement mechanisms to manage and refine AI-generated outputs. One solution could be to add features for automated testing and error detection in outputs before deployment. Another is to use models that enable better context retention for iterative tasks and reduce hallucinations.

"Something that I noticed when working with different AI tools on design and styling is that the generated outputs tend to be inconsistent, for example, paddings and margins. It seems that tools currently lack the ability to reference existing design systems and already-defined parameters to maintain consistency across the entire project. Every new design task feels like starting from scratch." - Vikrant Rana, WeWeb & low-code developer

AI models can generate designs that lack consistency and ignore user-defined parameters such as padding, margins or typography. They can also struggle with visual hierarchy and component organization, and with learning and applying company-specific design systems and styles.

Solution: AI-powered app development tools should be able to learn, reference, and adapt to user-defined design systems and maintain coherence across projects. Emphasis should be on building systems that are customizable and provide users with multiple design iterations to select from.
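As a rough illustration of what "referencing a design system" could mean in practice, here is a minimal sketch that checks AI-generated spacing values against a list of allowed values. The token values, the `find_spacing_violations` helper and the sample styles are all made up for this example; a real tool would read them from the project's own design system.

```python
# Hypothetical design system: the only spacing values (in px) this project allows.
SPACING_TOKENS = {0, 4, 8, 16, 24, 32}


def find_spacing_violations(styles, tokens=SPACING_TOKENS):
    """Return (component, property, value) for each padding/margin not in tokens."""
    violations = []
    for component, props in styles.items():
        for prop, value in props.items():
            if prop.startswith(("padding", "margin")) and value not in tokens:
                violations.append((component, prop, value))
    return violations


# Example AI-generated styles (invented for illustration).
generated = {
    "card": {"padding": 16, "margin-top": 10},
    "button": {"padding": 8, "margin": 4},
}

# Lists the off-system values so they can be fixed or re-prompted.
print(find_spacing_violations(generated))
```

A check like this could run on every AI-generated change, so inconsistent paddings and margins are flagged before they spread across the project.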

The wider the context, the harder it is for AI models to keep track of what they were originally working on. This is an LLM-wide issue where models can become overwhelmed with vast amounts of contextual data and struggle to pinpoint specifics in the sea of information.

As a result, you’ll often find yourself prompting the model back to the initial scope of the task.

“I often see AI losing context in long conversations across various platforms and forgetting what it's been originally working on. It feels like I constantly need to remind a model of the initial scope. What I want to do to solve this is to create crystal clear prompt templates that will remind the model throughout the work that this is the task at hand, and this is the feedback.” - Tessa Kriesel, DevRel leader
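The prompt-template idea from the quote could look something like the sketch below. The template wording, the field names and the `build_prompt` helper are assumptions made for illustration, not a format any particular tool requires.

```python
from string import Template

# Hypothetical template: the field names and wording are illustrative only.
SCOPED_PROMPT = Template(
    "Task (do not drift from this): $task\n"
    "Latest feedback: $feedback\n"
    "Request: $request"
)


def build_prompt(task, feedback, request):
    """Prefix every request with the original task and the latest feedback."""
    return SCOPED_PROMPT.substitute(task=task, feedback=feedback, request=request)


prompt = build_prompt(
    task="Build a login page with email and password fields",
    feedback="The submit button should be full width",
    request="Add a 'Forgot password?' link under the form",
)
print(prompt)
```

Because the task and feedback are restated in every message, the model is reminded of the scope even late in a long conversation.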

Solution: Platforms can integrate more context-aware LLMs into the workflow. For example, with WeWeb, users can give formulas, workflows, and component data as context to the AI model, an approach that has proved to be quite effective.

A good indicator of a model's context awareness is the needle-in-a-haystack test, which evaluates how effectively a model can retrieve a specific piece of information from a large amount of data without getting distracted by the surrounding hay. Some models score better than others.
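For readers curious how such a test works, here is a simplified sketch. It hides one sentence (the needle) at different depths in a long block of filler text and checks whether the answer contains the expected word. The `ask_model` parameter stands for whatever function calls your LLM; the `forgetful_model` below is a fake stand-in used only so the example runs without an API, and real benchmarks are considerably more thorough.

```python
def build_haystack(needle, filler, total_sentences, depth):
    """Place `needle` at a relative depth (0.0 = start, 1.0 = end) among filler sentences."""
    position = round(depth * total_sentences)
    sentences = [filler] * total_sentences
    sentences.insert(position, needle)
    return " ".join(sentences)


def run_needle_test(ask_model, needle, question, expected, depths, total_sentences=200):
    """Return {depth: True/False}: did the answer at that depth contain `expected`?"""
    results = {}
    for depth in depths:
        haystack = build_haystack(needle, "The sky was grey that day.", total_sentences, depth)
        answer = ask_model(f"{haystack}\n\nQuestion: {question}")
        results[depth] = expected.lower() in answer.lower()
    return results


# Stand-in for a real LLM call (an assumption for this example): it only
# "reads" the first 2,000 characters, mimicking a model that loses later context.
def forgetful_model(prompt):
    visible = prompt[:2000]
    return "The button must be teal." if "teal" in visible else "I don't know."


results = run_needle_test(
    forgetful_model,
    needle="The checkout button must be teal.",
    question="What color must the checkout button be?",
    expected="teal",
    depths=[0.0, 0.5, 1.0],
)
print(results)
```

Swapping `forgetful_model` for a function that calls a real model gives a quick, if rough, picture of where in a long context that model starts to miss details.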

When it comes to debugging and adjustments, AI-generated outputs often leave users unsure of what changes were made or why. This lack of control and visibility makes it hard to understand, edit and revert changes if needed, widening the gap between user intent and AI execution.

Solution: User-friendly interface for reviewing and refining AI-generated outputs, along with a way to inform users of any changes. In addition, users should be able to test, refine, and edit AI-generated changes.

For example, at WeWeb we recently introduced the Task Manager to give users more transparency and control over workflow implementation.

When a user creates something with WeWeb AI that has workflows associated with it, WeWeb AI will not automatically implement those workflows. Instead, it will put them into a Task Manager and let users choose which workflows they want to execute and which to dismiss.
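The general pattern here (hold AI proposals in a queue until a human approves or dismisses them) can be sketched in a few lines. This is not WeWeb's implementation; the class names, statuses and sample workflows are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass, field


@dataclass
class ProposedWorkflow:
    name: str
    status: str = "pending"  # "pending", "approved", or "dismissed"


@dataclass
class ReviewQueue:
    """Holds AI-proposed workflows until a human decides what to do with them."""
    items: list = field(default_factory=list)

    def propose(self, name):
        # Nothing is executed here: the proposal only waits for review.
        self.items.append(ProposedWorkflow(name))

    def decide(self, name, approve):
        for item in self.items:
            if item.name == name and item.status == "pending":
                item.status = "approved" if approve else "dismissed"
                return item
        raise KeyError(f"No pending workflow named {name!r}")

    def approved(self):
        return [item.name for item in self.items if item.status == "approved"]


queue = ReviewQueue()
queue.propose("Send welcome email on sign-up")
queue.propose("Delete inactive accounts nightly")
queue.decide("Send welcome email on sign-up", approve=True)
queue.decide("Delete inactive accounts nightly", approve=False)
print(queue.approved())
```

The key design choice is that proposing and executing are separate steps, so nothing the AI suggests takes effect without an explicit human decision.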

AI-generated outputs rely heavily on the quality of prompts. Crafting the right prompt is an art in itself, and it can be time-consuming since there is no one formula to rule them all. You may need a lot of prompting and re-prompting to achieve the desired output, especially for more complex functionality.

Furthermore, re-prompting and adjusting content or functionality can lead to unpredictable results.

"When it comes to adjusting or adding smaller details, a lot of it comes down to how you build prompts, and that can take a lot of time. You need to learn how to use different terminology and describe what you want to achieve in a way that is clear to the AI model." - Alexander Sprogis, Co-founder at VisualMakers

Solution: App development tools need to assist with interactive prompts, visualizations, and clear templates to help users navigate conversations with AI and get the desired output more easily.

“A survey by Snyk, a cybersecurity firm, found that more than half of organizations said they had discovered security issues with poor AI-generated code.”

The privacy of data, stability, and architectural robustness of AI-driven solutions, as well as potential vulnerabilities in generated code, are top of mind for those who are developing apps. The lack of QA mechanisms for verifying AI-generated code functionality doesn’t make things easier.

“When speaking with companies that are looking to lean into no-code/low-code and AI, some of the main concerns that come up are security and data privacy. There is a lack of trust, especially when it comes to AI agents. For enterprises — while cost and speed are important — ensuring their products are secure, resilient and compliant is still the main focus.” - Paul Clarke, Founder at Fabrik Labs

Solution: Ensuring transparency, flexibility and control in the development process is crucial for both developers and non-technical users. App development tools should provide ways to audit, test and ensure compliance.

While AI has shown incredible capabilities when it comes to app development, huge amounts of know-how are required to string pieces of an app together and check that it works.

Building applications requires creativity, judgment, technical expertise, an understanding of user needs, and a human's ability to comprehend broad contexts and synthesize years of experience into nuanced decision-making. And this is something that AI has yet to fully grasp.

At WeWeb, we believe the key to helping non-coders get the most out of AI is to combine it with a visual interface that allows humans to remain in control and step in when necessary.

Sign up now, pay when you're ready to publish.